Planning with Hierarchical Reinforcement-Learning Agents

Motion Planning Course - Winter 2022 - Professor Dmitry Berenson

I developed a Rapidly-exploring Random Tree (RRT) algorithm that uses the intermediate layers of a Hierarchical Actor-Critic (HAC) agent to model state propagation. In doing so, I demonstrated the use of an RL agent for planning and the use of Q-functions to encourage safer long-horizon plans.

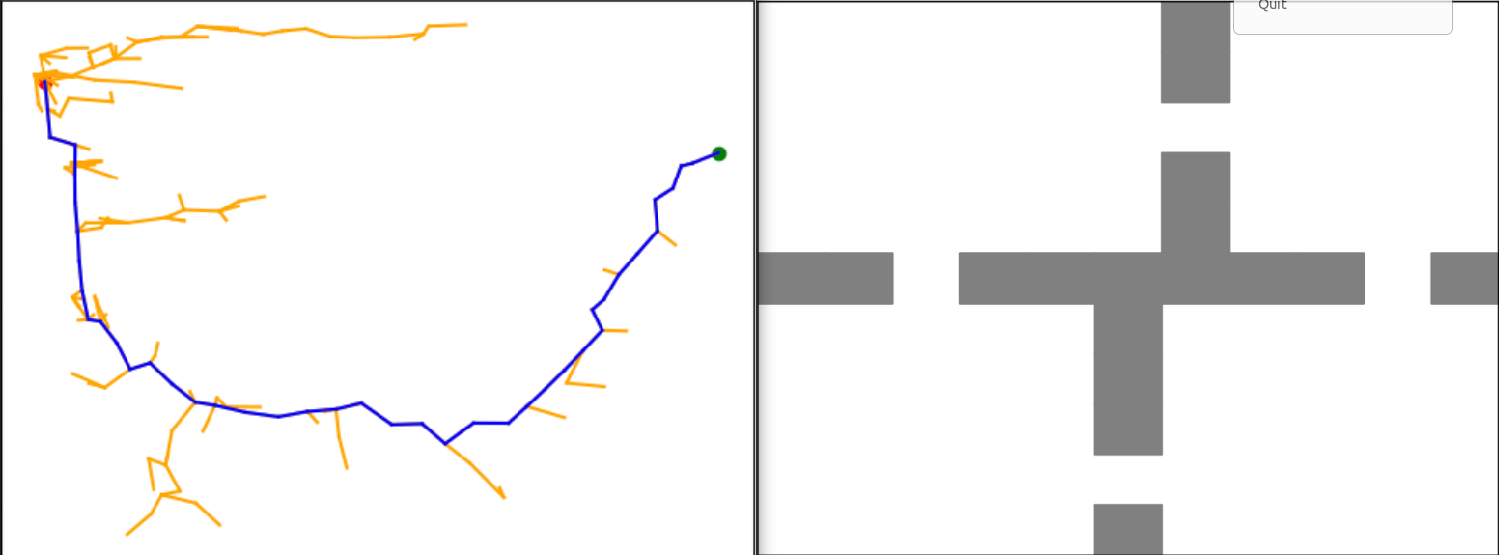

The image shows the paths explored by an RRT that uses a Hierarchical Actor-Critic agent's policy to predict the steps to take, and the agent's Q-functions to determine the quality of each predicted path. Different levels of the hierarchy contribute to the path's quality estimate, steering the tree to explore and produce more promising paths.
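
The idea can be illustrated with a minimal Python sketch. It is not the project's implementation: `subgoal_policy` and `q_value` are hypothetical stand-ins for a trained HAC agent's intermediate-level policy and per-level Q-functions, and the obstacle, level weights, and threshold are made-up values chosen only to show how a policy can replace the RRT steering step and how Q-values from several levels can gate which extensions are kept.

```python
import math
import random

# Hypothetical 2D world: one circular obstacle (assumption, for illustration only).
OBSTACLE = (5.0, 5.0)
RADIUS = 1.0


def subgoal_policy(state, target, max_step=0.5):
    """Stand-in for an intermediate-level HAC policy (assumption).

    Proposes the next state: a point at most max_step from `state`,
    in the direction of `target`.
    """
    dist = math.dist(state, target)
    if dist <= max_step:
        return target
    scale = max_step / dist
    return (state[0] + (target[0] - state[0]) * scale,
            state[1] + (target[1] - state[1]) * scale)


def q_value(state, subgoal, level):
    """Stand-in for the Q-function of one hierarchy level (assumption).

    Returns 0 when the subgoal is comfortably clear of the obstacle and a
    negative value otherwise. Higher levels use a larger safety margin.
    This toy version ignores `state`; a learned Q-function would not.
    """
    clearance = math.dist(subgoal, OBSTACLE) - RADIUS
    margin = 0.25 * (level + 1)
    return min(0.0, clearance - margin)


def path_quality(state, subgoal, level_weights):
    """Weighted sum of the per-level Q-values for one proposed extension."""
    return sum(w * q_value(state, subgoal, lvl)
               for lvl, w in enumerate(level_weights))


def plan(start, goal, level_weights=(0.5, 0.5), q_threshold=-0.1,
         iters=5000, goal_bias=0.1, goal_tol=0.5, bounds=(0.0, 10.0), seed=0):
    """RRT whose extensions come from the policy and are filtered by Q-values."""
    rng = random.Random(seed)
    parents = {start: None}  # maps each tree node to its parent
    for _ in range(iters):
        # Sample a target, occasionally the goal itself.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        nearest = min(parents, key=lambda n: math.dist(n, sample))
        # The policy, not a straight-line steer, predicts the next state.
        new = subgoal_policy(nearest, sample)
        if new in parents:
            continue
        # Discard extensions the Q-functions rate as low quality.
        if path_quality(nearest, new, level_weights) < q_threshold:
            continue
        parents[new] = nearest
        if math.dist(new, goal) <= goal_tol:
            path, node = [], new
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
    return None  # no path found within the iteration budget
```

Calling `plan((1.0, 1.0), (9.0, 9.0))` returns a list of waypoints from the start to within `goal_tol` of the goal, or `None` if the iteration budget runs out. Raising the weight on a higher level in `level_weights` makes the tree keep more distance from the obstacle, which mirrors how different levels of the hierarchy can weigh on a path's quality.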